Scientists are working on a brain-computer interface that creates music according to your mood

The technology turns emotions into “a musical representation that seamlessly and continuously adapts to the listener’s current emotional state”

Credit: Cavan Images/Alamy

A brain-computer interface that translates its wearer’s mood into music is being developed to help users with emotion mediation.

Scientists Stefan Ehrlich from the Technische Universität München and Kat Agres from the National University of Singapore described the device in an interview with the EveryONE blog, published by open access scientific journal PLOS ONE.

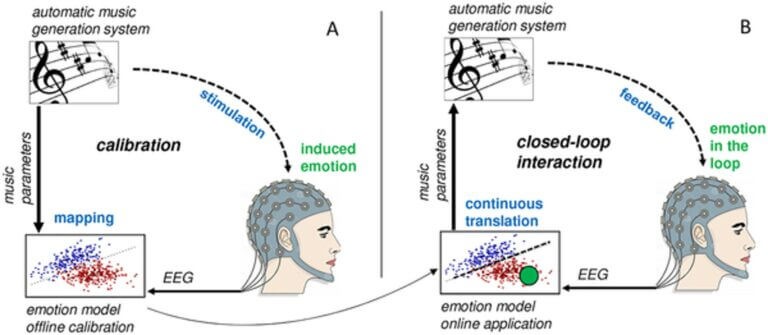

As the device generates music that reflects the user’s emotional state, the user can then actively ‘interact’ with their emotions through the music. As Ehrlich describes, the device “translates the listener’s brain activity, corresponding to a specific emotional state, into a musical representation that seamlessly and continuously adapts to the listener’s current emotional state.”

The ever-evolving music makes listeners aware of their emotional state, which in turn lets them mediate their emotions.

The scientists have already put their device to the test with a group of young people suffering from depression “who actually think of their identity in part in terms of their music,” as Agres describes.

While the group was divided on the ease of use of the device, Agres reported that “without telling the listeners how to gain control over the feedback […] all of them reported that they self-evoked emotions by thinking about happy/sad moments in their life,” to bring their emotional state under control.

He continued, “I want to emphasise that the system triggered people to engage with their memories and with their emotions in order to make the music feedback change. I was surprised that all of the subjects chose this strategy.”

The duo went on to describe the challenges of the project, including making a device that continually generates music from emotional state sound like continuous music rather than a series of sounds indicating mood. Agres noted the need for flexibility to react to brain state changes in real time, stating the device had to adapt “to their brain signals and sound continuous and musically cohesive.”

Agres and Ehrlich are about to launch a second batch of tests of their automatic music generation system with healthy adults as well as patients suffering from depression. Should the tests prove successful, they plan to use the device to help stroke patients battling depression.

The prospect of a technology that can proverbially read minds and create music from what it finds is an exciting one, and we’re curious whether musicians will one day be allowed to use it to hear the music in their minds. Who knows: if the technology is pushed far enough, maybe we’ll be able to transcribe the music in our heads without needing to pick up an instrument.